Two Smart AI Models. Zero Common Sense.

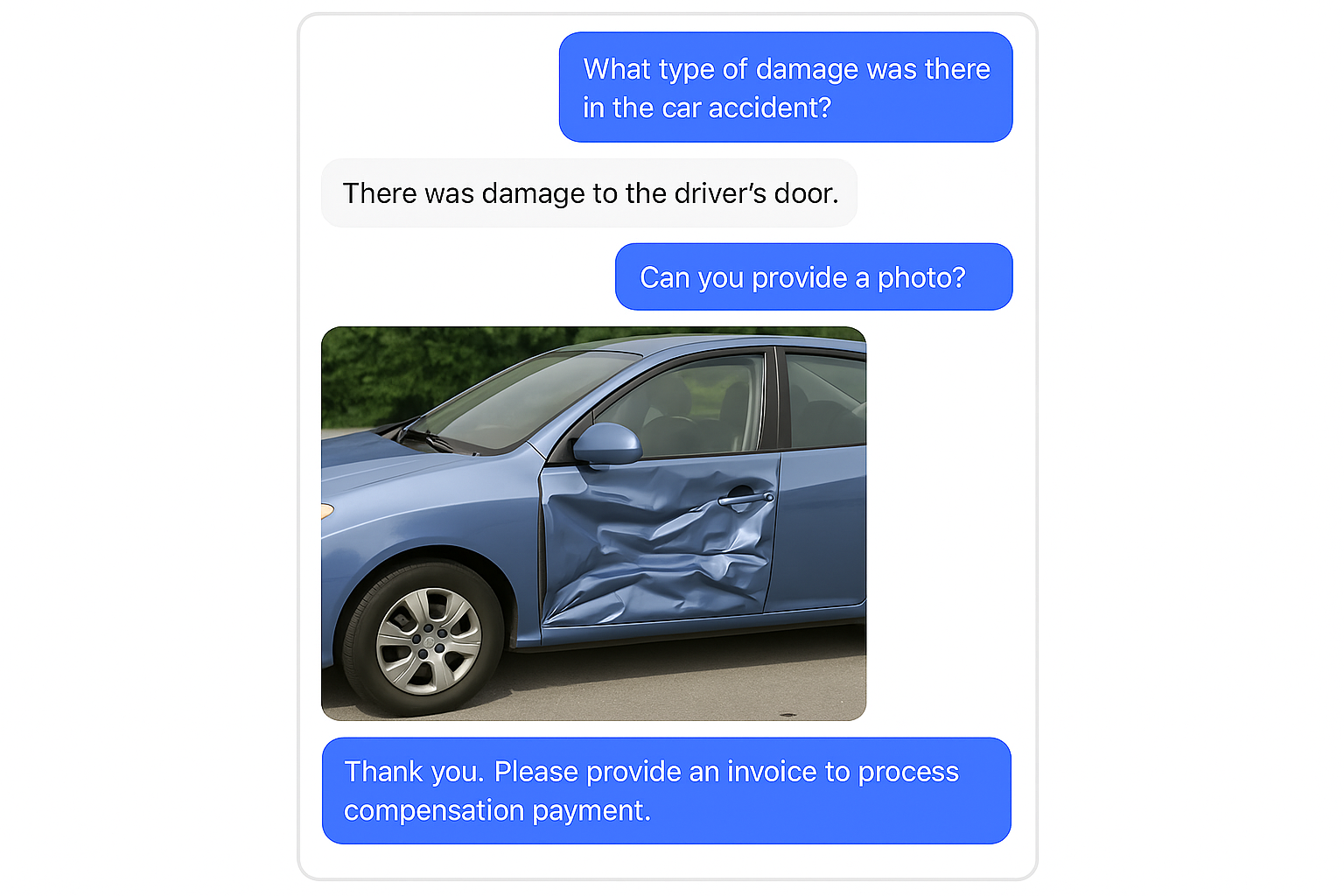

A customer sends a photo of a smashed delivery box to an AI support bot. The vision system scans it and says, “Looks damaged.” The GenAI part jumps in, apologizes nicely, and sends out a refund. Quick, smooth... and completely wrong. The image was fake. Just a few edits were enough to fool the system. One model got tricked by what it saw. The other believed it and took action. When vision and language don’t check each other, AI doesn’t just make a mistake. It makes it official.

AI is no longer a one-trick tool. It writes reports, analyzes photos, answers complex questions, and even kicks off real-world actions. Most of this power comes from two areas working side by side: Generative AI and Computer Vision. Each opens the door to exciting automation and intelligence. But each also comes with its own set of security headaches.

GenAI security is about more than just stopping bad outputs. These models can be nudged, tricked, and manipulated in ways that look harmless at first but lead to risky behavior later. A well-placed prompt can quietly shift the model’s intent or bypass restrictions altogether. Attackers can inject hidden instructions into input fields, uploaded documents, or even everyday conversations. And if the model has access to tools, databases, or APIs, one small crack can lead to major consequences.

The risk goes deeper when you consider how much trust organizations put in these systems. GenAI is now embedded in customer service flows, decision-making systems, and internal workflows. If it misinterprets a request, fabricates information, or exposes sensitive data, the fallout can be real. It’s not just about stopping wild outputs, it's about making sure the system behaves responsibly in the context it’s operating in. And that context is often messy, open-ended, and unpredictable.

Computer Vision security, on the other hand, deals with what the AI sees and how it interprets visual inputs. These systems are getting incredibly good at identifying faces, reading documents, spotting anomalies, and even making sense of busy environments. But they’re also surprisingly easy to fool. A photo with tiny changes—too small for the human eye—can completely confuse the model. Spoofed images, manipulated ID cards, or synthetic videos can slip through unless there’s a way to catch them.

It’s not just about fakes, though. Vision models can fail when lighting changes, when cameras get dirty, or when the real world just looks a little different than the training data. And if the system is expected to take action based on what it sees—grant access, trigger a response, or report something critical—then even a small error can cause a big mess. Security here isn’t just about blocking threats. It’s about knowing when to pause, when to ask for help, and when something just doesn’t look right.

When AI Starts Guessing, You Start Losing

Things get especially risky when vision and language come together. Imagine a system that looks at a photo, uses GenAI to explain what’s happening, then takes action. Sounds efficient, right? But what if the image is fake? Or what if the GenAI misreads it and acts too confidently? That one decision could be based on two wrong assumptions—and no one might notice until it's too late.

We've seen this play out in all kinds of ways. Agents that write reports based on tampered inputs. Bots that misidentify documents and approve the wrong request. Smart systems that rely on both what they see and what they think but fail to connect the dots. Whether it's an access control system, a virtual assistant, a self-service kiosk, or an autonomous inspection tool, the pattern is the same. When vision and GenAI don't communicate clearly, mistakes happen.

And those mistakes aren't always easy to trace. One part of the system might be behaving exactly as expected. But when it hands off to the next piece, things go off track. This is where attackers find opportunities. Not by breaking the whole system, but by slipping between the cracks.

Why Multimodal Security Isn’t Just Smarter. It’s Necessary.

AI systems today don’t operate in isolation. The most advanced and useful ones are multimodal by design. That means they take in multiple types of input, use different models to interpret them, and often act without a human in the loop. A system might scan a form, understand the intent behind a message, check a policy, and then kick off an action. If you’re only watching one piece of that process, you’re missing the bigger story.

Multimodal security works because it doesn’t treat these parts as separate problems. It understands how an image can influence a decision, how a prompt can be crafted to match what a vision system is seeing, and how actions taken by one model affect the others. Instead of building walls between each system, it connects the dots across the full pipeline. It lets you see the full decision chain, not just the individual steps.

This approach makes it possible to catch the weird stuff early. It lets you understand when something is off, not because one model is acting strange, but because the interaction between them doesn't make sense. It also helps you set clear, shared policies that apply across the board. You don’t have to guess which system caused the problem—you can see it unfold, and stop it before it spreads.

A strong multimodal setup doesn’t just spot problems—it can stop them. That means training models on real examples of fraud before they ever go live. It means checking inputs on the fly, and sounding the alarm when what the AI sees doesn’t match what it thinks. It also means watching how people use the system over time, so if something suddenly feels off, the AI can act accordingly. This kind of layered, real-time defense is what turns a smart system into a secure one.

The more your AI sees and does, the more it needs to be understood and protected as a whole. Multimodal security doesn’t just reduce risk. It makes your AI more trustworthy, more transparent, and way less likely to go rogue when you least expect it.